Where is the AI?

Artificial intelligence (AI) is all around us. It powers the helpful voice on my phone and it’s in the digital assistant on my kitchen counter. I have to admit that I like saying “Alexa, turn on Christmas” to turn my Christmas lights on and off. The device itself is just a simple endpoint computer, like a terminal, communicating with a cloud-based service that does all the hard work of interpreting what I say and figuring out what to do.

Many AI systems are not as obvious as Alexa. They surround us, yet we don’t see them. Take the ads on my Facebook feed, for example: an algorithm weighs what it knows about me and works out which ads will likely work best. Even with Google, what appears to be just a search box is much smarter. If you ask the question “What is the population of Canada,” Google is not just searching documents using its famous PageRank algorithm; it’s doing much more. It’s figuring out that an infographic is the best way to communicate the population of Canada to me and showing this alongside its other insights. It also recognizes flight numbers and responds differently depending on context.

What we think is a simple search is much more. AI is sometimes quite subtle, helping us in ways we may not realize.

Good experience design often makes our little AI helpers invisible to us. Two of Dieter Rams’ ten principles of good design are “Good design is unobtrusive” and “Good design is as little design as possible.” We can see why subtle or invisible AI happens: it is considered good design.

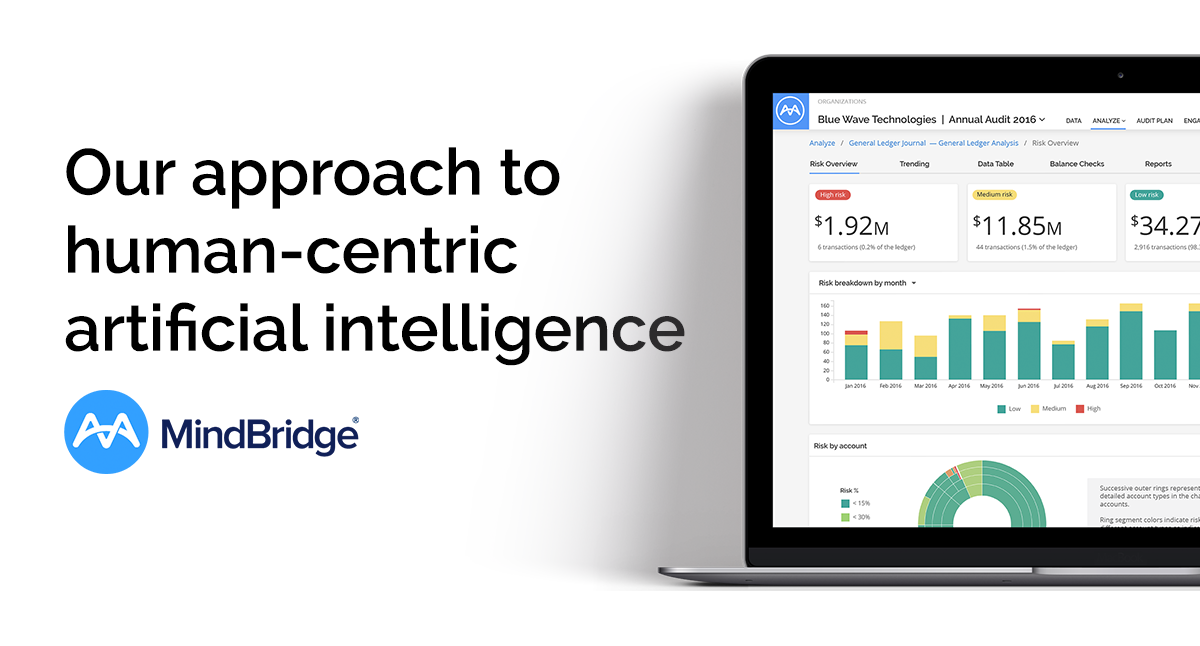

Does MindBridge hide its AI?

Our philosophy is that when our AI provides insight or direction to users, we give them the feedback they need to both see it and understand it. We believe in human-centric AI, which means the human is the central part of the system: they should be able to understand what the AI is telling them and have explanations at each stage. The AI needs to communicate, so being visible is an essential element of the trust relationship we are endeavoring to create.

Having said that, sometimes we can’t help ourselves: we make the experience seamless and require users to click on little information tabs to find out more. This is a design principle called ‘progressive disclosure,’ which allows a user to select the level of detail they want.

So where is our AI? How do you know it’s there and working? Let’s take three examples from our AI Auditor product and walk through the techniques and the design considerations.

#1 Unobtrusive but verifiable

Auditors often have to classify items in audit tools manually. They may need to say what kind of money is held in a certain type of account, whether it’s a cash asset, a liability, or maybe a non-capital expense. This process of telling a software tool what something means is laborious and repetitive. I think it’s fair to say nobody wants to do it, but it’s required to get an accurate view of the finances. This is a great candidate for automation with AI.

MindBridge has a built-in account classifier that uses the human-readable label on financial accounts to determine what kind of account it actually is. This is a form of language processing and we use two methods: the first is a simple search, which works well for well-labelled accounts; the second is a neural network classifier, which learns how people classify accounts. The net effect (excuse the pun ☺) is that most users of MindBridge spend little to no time telling our system what an account is. It just knows. We do recommend, however, that users review its findings to confirm or correct them. Our AI also learns from these interactions.
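The two-pass idea described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not MindBridge’s implementation: the `KEYWORDS` table, the class names, and the `LearnedFallback` model are all hypothetical, and the fallback is a simple token-vote model standing in for the neural network classifier so that the example stays self-contained.

```python
from collections import Counter, defaultdict

# Hypothetical keyword table for the "simple search" pass.
KEYWORDS = {
    "cash": "cash asset",
    "payable": "liability",
    "loan": "liability",
    "rent": "expense",
}


def tokenize(label):
    """Lower-case an account label and split it into words."""
    return label.lower().replace("-", " ").replace("/", " ").split()


def keyword_match(label):
    """Return a class if any word in the label is a known keyword, else None."""
    for token in tokenize(label):
        if token in KEYWORDS:
            return KEYWORDS[token]
    return None


class LearnedFallback:
    """Token-vote model learned from user classifications.

    A deliberately simple stand-in for a neural network classifier.
    """

    def __init__(self):
        self.votes = defaultdict(Counter)  # token -> Counter of classes

    def learn(self, label, account_class):
        """Record one confirmed or corrected classification."""
        for token in tokenize(label):
            self.votes[token][account_class] += 1

    def predict(self, label):
        """Return the class with the most token votes, or None if unseen."""
        tally = Counter()
        for token in tokenize(label):
            tally.update(self.votes.get(token, {}))
        if not tally:
            return None
        return tally.most_common(1)[0][0]


def classify(label, model):
    """Try the keyword search first, then fall back to the learned model."""
    hit = keyword_match(label)
    if hit is not None:
        return hit, "search"
    return model.predict(label), "learned"


model = LearnedFallback()
model.learn("Office supplies", "expense")       # a user-confirmed example
print(classify("Cash on hand", model))          # handled by the keyword pass
print(classify("Supplies - warehouse", model))  # handled by the learned pass
```

Returning the source of each decision (`"search"` or `"learned"`) alongside the class is one way to keep the result verifiable: a review screen can show the user which pass produced a classification before they confirm or correct it.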

This is what it looks like as it’s working: it appears to be loading data, pretty unobtrusive and just doing its thing.

This is what it looks like when the user verifies the outcome. The user has the option to change the classification of the account. This is the only real clue that something smart has just happened.

You could be forgiven for not noticing that a lot of work is happening, but there are some real time savings here. Below are some charts of simple text search methods vs. a hybrid of text search and AI together. On simple and well-labelled accounting structures, the accuracy of a text search is indistinguishable from that of an AI. But as we get a little more complex, we see big wins. Further, as the complexity grows to involve a massive organization’s accounts, you see that the simple text search accuracy breaks down and doesn’t cope at all. Conversely, the AI method keeps on punching through the problem and gets it done. The time savings at the complex level are huge; we are talking hours, if not days, of human time saved in laborious activities.

#2 Search that tells you what it understands and gives you options

The MindBridge search interface is a little different from what you’re used to, as we want everything to be understandable and explicable even at the level of a search box. Have you ever typed a search into Google and not got the results you wanted? Chances are you didn’t scroll to page 2. Instead, you typed in a slightly different question and got what you wanted by trial and error.

At MindBridge, we value the AI being visible and explaining itself so that our users can figure out what part of the question is driving the view of data. Here we see a search user interface where the user types their query. There is no AI yet.

The user hits go! The AI system parses the language and uses natural language processing (NLP) techniques to unpack what is being requested. Our NLP AI understands language in general but also common accounting terminology. It highlights the important terms in the query and filters the transaction list accordingly.

Note that the highlights are clickable so that a user can explore other possible paths and verify that the AI has understood the question. It also understands more complex semantics such as conjunctions, which combine terms using logical expressions like AND, OR, and NOT. This allows more complex questions to be posed and answered.
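To make the idea concrete, here is a minimal Python sketch of a search box that reports which terms it understood and then filters transactions using AND, OR, and NOT. It is an illustration only: the `VOCAB` table, the transaction fields, and the parsing rules are assumptions made for this example, and a real NLP system would do far more than match known words.

```python
# Hypothetical vocabulary: recognized term -> test applied to a transaction.
VOCAB = {
    "cash": lambda t: t["account"] == "cash",
    "revenue": lambda t: t["account"] == "revenue",
    "weekend": lambda t: t["weekday"] in ("Sat", "Sun"),
    "large": lambda t: t["amount"] >= 10_000,
}


def parse(query):
    """Return (highlights, groups).

    groups is an OR-list of AND-lists of (term, negated) pairs.
    """
    groups, current, highlights = [], [], []
    negate = False
    for word in query.lower().replace(",", " ").split():
        if word == "or":
            if current:
                groups.append(current)
            current, negate = [], False
        elif word == "not":
            negate = True  # applies to the next recognized term
        elif word in VOCAB:
            current.append((word, negate))
            highlights.append(word)
            negate = False
        # "and" and unrecognized words are skipped: AND is the default.
    if current:
        groups.append(current)
    return highlights, groups


def matches(txn, groups):
    """True if the transaction satisfies every term of at least one group."""
    return any(
        all(VOCAB[term](txn) != negated for term, negated in group)
        for group in groups
    )


def search(query, transactions):
    """Return the understood terms and the transactions that match them."""
    highlights, groups = parse(query)
    if not groups:  # nothing understood: show everything rather than nothing
        return highlights, list(transactions)
    return highlights, [t for t in transactions if matches(t, groups)]


transactions = [
    {"id": 1, "account": "cash", "weekday": "Sat", "amount": 500},
    {"id": 2, "account": "cash", "weekday": "Tue", "amount": 20_000},
    {"id": 3, "account": "revenue", "weekday": "Sun", "amount": 15_000},
]
terms, hits = search("show large cash transactions not on weekend", transactions)
print(terms)                   # the terms a UI could highlight
print([t["id"] for t in hits])
```

Returning the recognized terms separately from the results is what lets an interface read the query back to the user: each highlighted term corresponds to one filter that can be inspected or swapped out.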

In this way, MindBridge users can not only search vast amounts of transaction data for specific scenarios but can also do so without writing an SQL query or using similar technical languages. The AI is effectively reading their query back to them, helping them understand what’s driving the results and showing other possibilities. This user interface is very artful as it provides both progressive disclosure and explainable AI, all in a search box.

For transparency, MindBridge has filed a patent for methods used in this search interface. We believe in ‘AI for Good’ and human-centric AI and we use patent protection to ensure the freedom to do the work we do.

#3 Ensemble AI

Ensemble AI is the main event at MindBridge and it guides much of our work. We consider its primary role to be a focusing function for people and, as we specialize in finding insights and irregularities in financial data, it allows us to do this in a robust and explainable way.

So how does Ensemble AI work?

First, we need to understand that the ensemble is not just one method or algorithm but many. It’s like having a panel of experts with different types of knowledge and asking each of them what they think about a given transaction or element of data. The system then combines all the insights from the individual algorithms together.

For example, AI Auditor includes standard audit checks, so some of these “experts” are following simple audit rules while others follow advanced AI techniques and algorithms. The point of the ensemble model is that they all work together like an orchestra. The user is the conductor of that orchestra: they can select what’s important to them, and the combined results from the ensemble are presented in an easy-to-follow way.
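One simple way to picture the “panel of experts” is a weighted average of individual scores, where the user’s weights play the conductor’s role. The sketch below assumes that combination rule purely for illustration; the check names, the 0-to-1 score scale, and the weighting scheme are hypothetical and are not a description of how AI Auditor combines its results.

```python
def ensemble_score(scores, weights):
    """Combine per-check scores (0 to 1) into one score using user weights.

    Returns (overall, contributions) so each check's share stays visible.
    Checks with a weight of zero are switched off.
    """
    active = {
        name: weight
        for name, weight in weights.items()
        if weight > 0 and name in scores
    }
    total = sum(active.values())
    if total == 0:
        return 0.0, {}
    contributions = {
        name: scores[name] * weight / total for name, weight in active.items()
    }
    return sum(contributions.values()), contributions


# Hypothetical scores for one transaction from three "experts".
scores = {"weekend_posting_rule": 1.0, "expert_score": 0.5, "rare_flow": 0.0}

# The user decides which checks matter; here the rule-based check is off.
weights = {"weekend_posting_rule": 0.0, "expert_score": 1.0, "rare_flow": 1.0}

overall, parts = ensemble_score(scores, weights)
print(overall)
print(parts)
```

Keeping the per-check contributions next to the overall score is what makes this kind of result explainable: the user can see which “expert” raised the concern rather than receiving a single opaque number.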

Here’s an example of one of the detailed views of the ensemble at work. You see all the little rectangles with the larger red or green highlights; these are the individual AI capabilities in the ensemble.

Let’s dig deeper into two of these capabilities.

Expert score

One example of an AI method we use is an ‘Expert System.’ This is a classical AI method that draws on the knowledge of real-world accounting practice to identify unusual transactions.

How do we capture real-world knowledge? We work closely with audit professionals and quiz them with surveys and specific questions about risky transactions, allowing us to construct an expert system that knows hundreds of account interactions and their associated concerns. We can run this method very quickly on large amounts of data, allowing us to scale human knowledge and highlight issues that a human user looking at a small sample could easily miss.
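A classical expert system of this kind can be sketched as a lookup table of account interactions, each carrying a risk level and a concern written down by a professional. Everything in the example below is invented for illustration: the account names, the risk values, the concerns, and the default for unknown pairs are assumptions, not audit guidance and not MindBridge’s rule base.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    debit: str
    credit: str
    risk: float   # 0 (routine) to 1 (high concern); illustrative values only
    concern: str


# Hypothetical rules of the kind an audit professional might supply.
RULES = [
    Rule("cash", "revenue", 0.1, "routine cash sale"),
    Rule("fixed_assets", "expense", 0.8, "expense moved onto the balance sheet"),
    Rule("revenue", "accounts_receivable", 0.6, "revenue reversed against receivables"),
]
INDEX = {(rule.debit, rule.credit): rule for rule in RULES}


def expert_score(entry, default_risk=0.3):
    """Look up the debit/credit pair and return (risk, concern)."""
    rule = INDEX.get((entry["debit"], entry["credit"]))
    if rule is None:
        return default_risk, "no expert rule for this account pair"
    return rule.risk, rule.concern


def flag(entries, threshold=0.5):
    """Return (id, risk, concern) for every entry at or above the threshold."""
    flagged = []
    for entry in entries:
        risk, concern = expert_score(entry)
        if risk >= threshold:
            flagged.append((entry["id"], risk, concern))
    return flagged


entries = [
    {"id": "JE-1", "debit": "cash", "credit": "revenue"},
    {"id": "JE-2", "debit": "fixed_assets", "credit": "expense"},
]
print(flag(entries))
```

Because each lookup is a dictionary access, the same rules can be applied to every entry in a ledger rather than to a small sample, and each flag carries the concern that explains it.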

Rare flows

Ensemble AI can also identify unusual things using empirical methods, which measure what is usual or unusual in the data itself. One such method we use is called ‘Rare flows.’ This part of Ensemble AI is an unsupervised learning method from a family of algorithms known as outlier detection. The nice thing about unsupervised learning algorithms is that they need no labelled examples, so they carry none of the bias that labelling can introduce; they simply identify what’s in the data and thus let the data speak for itself.

The purpose of this method is to uncover unusual financial activity. We apply this method to all financial activity, and it is especially relevant to the PCAOB guidance on material misstatements, which says:

“The auditor also should look to the requirements in paragraphs .66–.67A of AU sec. 316, Consideration of Fraud in a Financial Statement Audit, for … significant unusual transactions.”

This algorithm finds unusual activity and highlights it, and we also perform this type of analysis with several different ensemble techniques. One of the nice things about the ensemble is that you’re not relying on one method: these techniques can look at account interactions, dollar value amounts, and other outlier metrics, and bring them all together.
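As a rough illustration of outlier detection on account interactions, the Python sketch below counts how often each debit-to-credit flow occurs and scores the rare ones highly. It assumes a very simple frequency-based score (the negative log of a flow’s share of all entries); the field names and the 5% cut-off are hypothetical, and the actual ‘Rare flows’ method is not described in enough detail here to reproduce.

```python
import math
from collections import Counter


def flow_counts(entries):
    """Count how often each (debit, credit) account flow appears."""
    return Counter((e["debit"], e["credit"]) for e in entries)


def rare_flow_scores(entries):
    """Score each flow by -log(share of all entries): rarer means higher."""
    counts = flow_counts(entries)
    total = sum(counts.values())
    return {flow: -math.log(n / total) for flow, n in counts.items()}


def rarest(entries, max_share=0.05):
    """Return the flows that make up at most max_share of all entries."""
    counts = flow_counts(entries)
    total = sum(counts.values())
    return sorted(flow for flow, n in counts.items() if n / total <= max_share)


# Hypothetical ledger: 98 routine entries and two unusual ones.
ledger = [{"debit": "cash", "credit": "revenue"}] * 98
ledger.append({"debit": "fixed_assets", "credit": "expense"})
ledger.append({"debit": "loan", "credit": "revenue"})

print(rarest(ledger))
```

No labels are needed: the method learns what is usual from the ledger itself, so the same code adapts to whatever is normal for a given organization. In an ensemble, a score like this would be one input among several rather than a verdict on its own.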

Why human-centric AI is needed in auditing

Most audit standards today, including the international standards, were the result of years of experience in previous cases of accounting irregularities. As such, they are great at identifying the problems of the past. The limitation is that the typical rules-based system approach to finding irregularities can never identify a circumstance that is not anticipated, and this is why we should apply AI methods like those described above.

A future-looking audit practice needs to adapt to new circumstances. Every industry is changing as a result of AI adoption, and the ability to uncover new and unusual activity, and explain why it is being flagged, is a key strength of AI systems used by forward-looking audit professionals.

This is why we need AI in auditing. In the words of John Bednarek, Executive Director of Sales Operations, Marketing & Strategic Business Development at MindBridge, “Auditors using AI will replace auditors who don’t.” The simple reason for this is that auditors who leverage AI will be faster and more complete in their work, providing a better service to their clients.